CHROME: Concurrency-Aware Holistic Cache Management Framework with Online Reinforcement Learning

Published in the 30th IEEE International Symposium on High-Performance Computer Architecture (HPCA 2024)

Xiaoyang Lu, Hamed Najafi, Jason Liu, Xian-He Sun

Background

As data-intensive workloads continue to grow, cache management techniques such as replacement, bypassing, and prefetching become essential, yet they are often treated independently of one another. CHROME integrates these techniques in a single online reinforcement learning framework so that they act as one cohesive cache management strategy.

Design

CHROME aims to bridge the gap between separate cache management techniques by leveraging reinforcement learning to optimize cache actions dynamically. By monitoring both data locality and concurrency, CHROME adapts to diverse workloads and system configurations in real time.

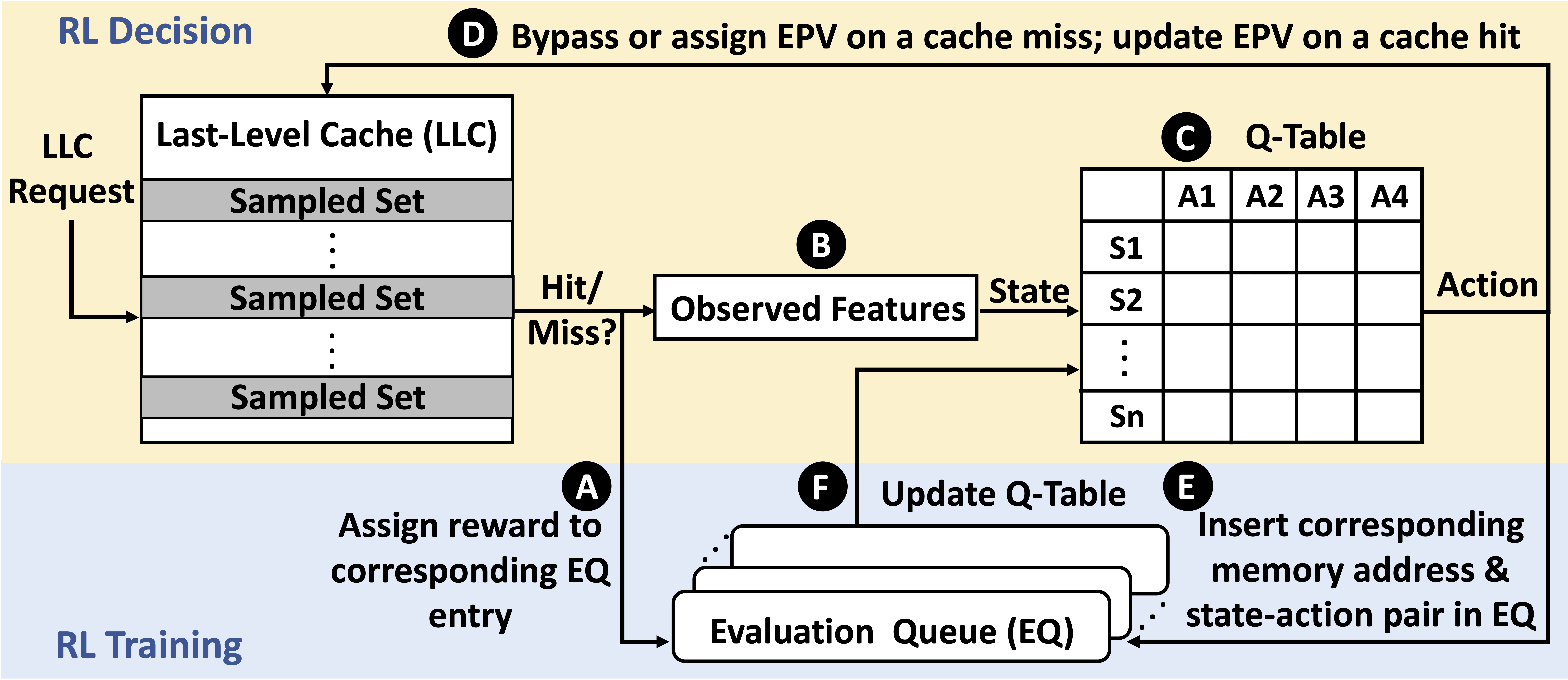

Overview of CHROME Design

Key Features

- Holistic Cache Management: Combines cache replacement, bypassing, and prefetching to optimize cache usage.

- Online Reinforcement Learning: Employs reinforcement learning to continually adjust cache decisions based on observed workload characteristics, improving adaptability.

- Concurrency-Aware System Feedback: Supplies the learning agent with concurrency-aware, system-level feedback, improving the accuracy of its cache management decisions.
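
To make the interplay of these features concrete, here is a minimal sketch of an online learning loop that picks a cache action per access and learns from feedback weighted by concurrency. It is not CHROME's actual implementation: the tabular SARSA-style update, the action names, the PC-based state, the reward values, and the hyperparameters are all assumptions chosen for illustration.

```python
import random
from collections import defaultdict

# Hypothetical action set; names are illustrative, not taken from the paper.
ACTIONS = ("bypass", "insert_low_priority", "insert_high_priority")

# Toy workload (assumption): one PC streams data that is never reused,
# the other touches data that is always reused.
REUSE = {"pc_streaming": False, "pc_hot": True}


def concurrency_aware_reward(action, reused, core_stalled):
    """Toy reward: +/-1 for a right/wrong decision, doubled when the core is stalled."""
    # Bypassing is right only if the block is not reused; inserting only if it is.
    correct = (action == "bypass") != reused
    magnitude = 2.0 if core_stalled else 1.0
    return magnitude if correct else -magnitude


class CacheAgent:
    """Tabular epsilon-greedy agent with an on-policy (SARSA-style) update."""

    def __init__(self, alpha=0.1, gamma=0.5, epsilon=0.05, seed=0):
        self.alpha = alpha      # learning rate (assumed value)
        self.gamma = gamma      # discount factor (assumed value)
        self.epsilon = epsilon  # exploration probability (assumed value)
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.rng = random.Random(seed)

    def greedy(self, state):
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def choose(self, state):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return self.greedy(state)

    def update(self, state, action, reward, next_state, next_action):
        target = reward + self.gamma * self.q[(next_state, next_action)]
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


def train(agent, steps=2000, seed=1):
    """Run the agent online over a synthetic access stream."""
    trace = random.Random(seed)
    pcs = list(REUSE)
    state = trace.choice(pcs)
    action = agent.choose(state)
    for _ in range(steps):
        stalled = trace.random() < 0.3  # assumed fraction of stalling misses
        r = concurrency_aware_reward(action, REUSE[state], stalled)
        next_state = trace.choice(pcs)
        next_action = agent.choose(next_state)
        agent.update(state, action, r, next_state, next_action)
        state, action = next_state, next_action


agent = CacheAgent()
train(agent)
print({pc: agent.greedy(pc) for pc in REUSE})
```

In this toy setup the agent learns online, without a separate training phase, and mistakes made while a core is stalled are penalized more heavily than mistakes hidden by other outstanding work. A hardware design would replace the dictionary with a small table indexed by hashed features; that detail is likewise an assumption here.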

Results

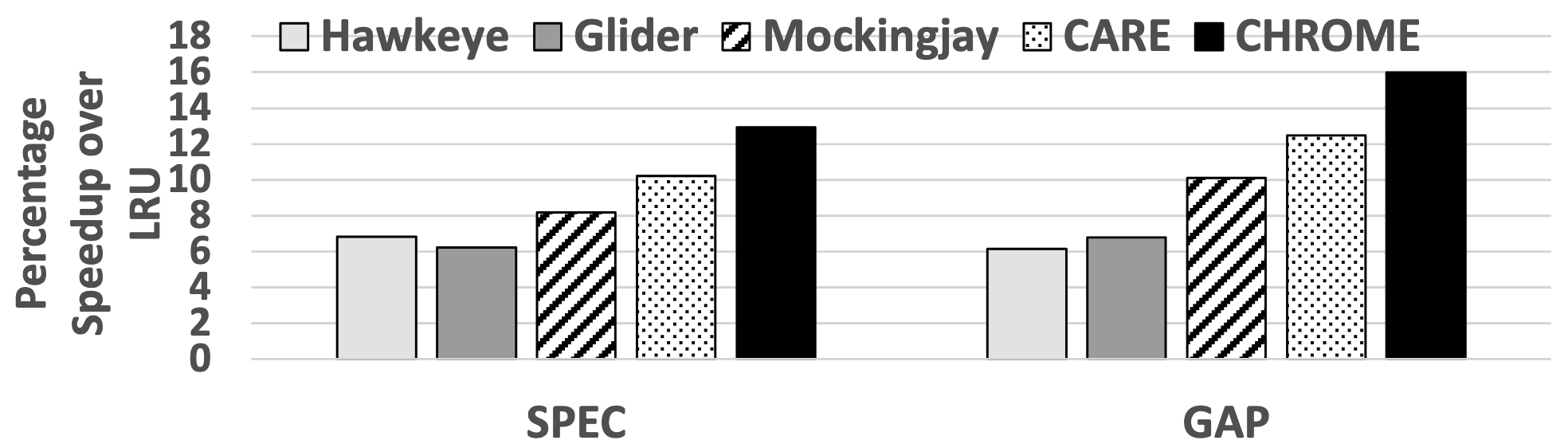

CHROME outperforms existing cache management schemes, achieving a 13.7% performance improvement over the LRU baseline in 16-core systems and consistently surpassing competing frameworks in diverse workloads.

Performance comparison among Hawkeye, Glider, Mockingjay, CARE, and CHROME

Conclusion

CHROME’s holistic and adaptive approach demonstrates the benefits of combining multiple cache management strategies through reinforcement learning, underscoring its potential as a versatile solution for high-performance computing systems.