CARE: A Concurrency-Aware Enhanced Lightweight Cache Management Framework

Published in The 29th IEEE International Symposium on High-Performance Computer Architecture (HPCA 2023), 2023

Xiaoyang Lu, Rujia Wang, Xian-He Sun

Background

While data access concurrency is a common feature in multi-core systems, traditional cache management policies focus primarily on data locality, often neglecting the optimization potential that concurrent accesses offer. This oversight limits cache performance improvements in data-intensive applications.

Design

Improving cache efficiency in multi-core systems requires cache management that considers both data locality and concurrent access patterns. Reducing the cache misses that incur high penalties under concurrent access improves overall performance and addresses the limitations of locality-only cache policies.

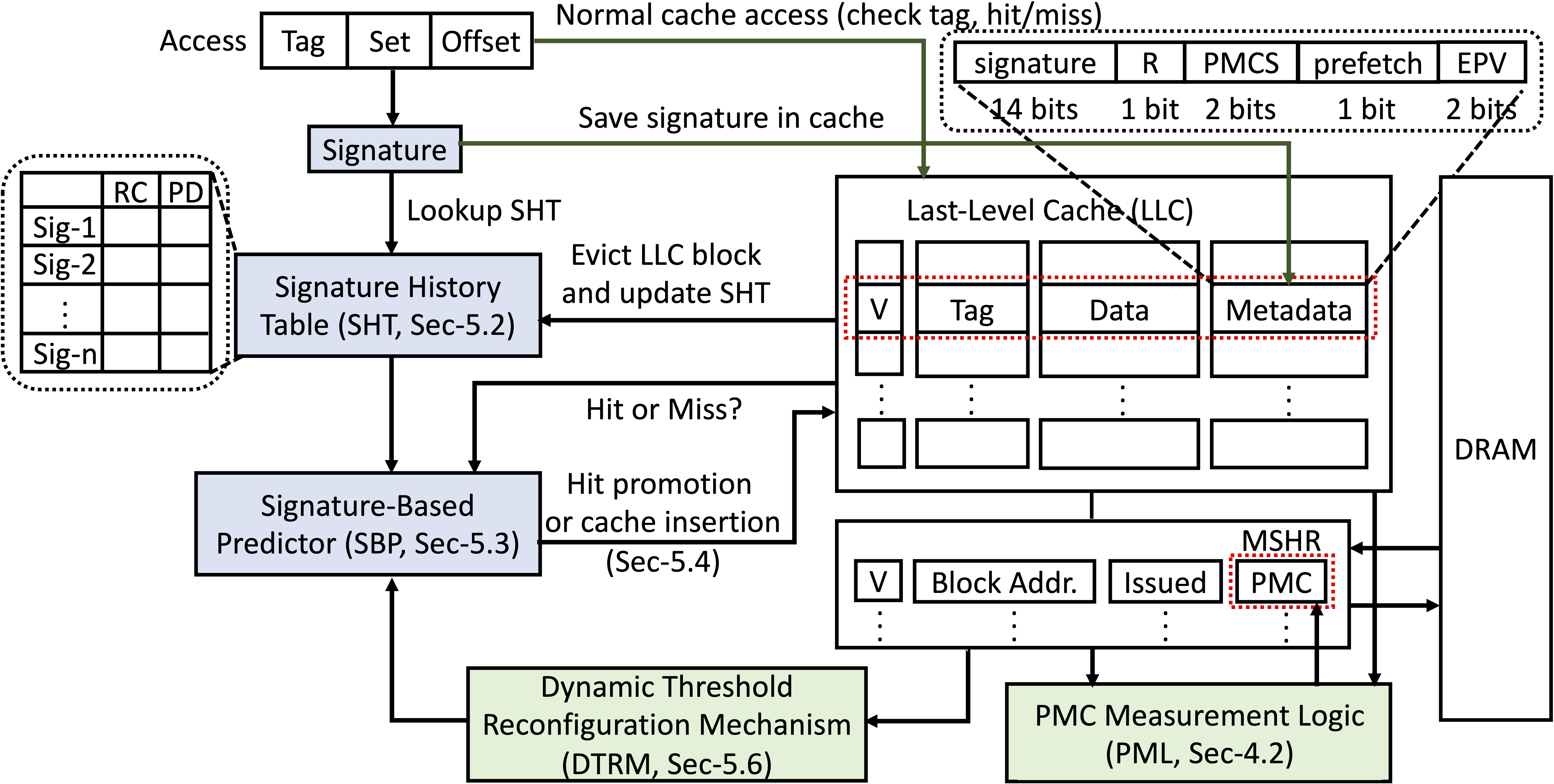

Overview of CARE Design

Key Features

- Pure Miss Contribution (PMC): A metric that quantifies the performance impact of cache misses, especially under concurrent access conditions, allowing CARE to weigh miss cost in replacement decisions so that blocks whose misses would be costly are favored for retention.

- Dynamic Threshold Reconfiguration Mechanism (DTRM): CARE dynamically adjusts cache management decisions based on PMC, aligning cache behavior with varying workload demands for enhanced efficiency.
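The two features above can be illustrated with a toy model. The sketch below is not CARE's implementation and does not reproduce the paper's PMC or DTRM definitions. It assumes, purely for illustration, that a miss's cost is its share of the cycles in which no hit activity overlaps the outstanding misses, and that a single cost threshold is nudged up or down to keep a target fraction of misses classified as high-cost. The names `pure_miss_cost`, `ThresholdedVictimPicker`, `step`, and `target_high_fraction` are all hypothetical.

```python
from collections import defaultdict


def pure_miss_cost(outstanding_per_cycle, hit_active_per_cycle):
    """Toy concurrency-aware miss cost (an assumption, not the paper's formula).

    Each cycle with outstanding misses and no overlapping hit activity
    contributes one unit of cost, split evenly among the misses that are
    outstanding in that cycle.
    """
    cost = defaultdict(float)
    for misses, hit_active in zip(outstanding_per_cycle, hit_active_per_cycle):
        if misses and not hit_active:
            share = 1.0 / len(misses)
            for miss in misses:
                cost[miss] += share
    return dict(cost)


class ThresholdedVictimPicker:
    """Hypothetical cost-aware victim selection with an adaptive threshold."""

    def __init__(self, threshold=1.0, step=0.25, target_high_fraction=0.5):
        self.threshold = threshold
        self.step = step
        self.target_high_fraction = target_high_fraction

    def is_high_cost(self, cost):
        return cost >= self.threshold

    def retune(self, recent_costs):
        """Move the threshold toward the target share of high-cost misses."""
        if not recent_costs:
            return
        high = sum(self.is_high_cost(c) for c in recent_costs) / len(recent_costs)
        if high > self.target_high_fraction:
            self.threshold += self.step
        elif high < self.target_high_fraction:
            self.threshold = max(self.step, self.threshold - self.step)

    def pick_victim(self, blocks):
        """blocks maps name -> (predicted_miss_cost, last_use_time).

        Prefer evicting low-cost blocks; fall back to all blocks if every
        block is high-cost. Ties are broken by least-recent use.
        """
        low_cost = [b for b, (c, _) in blocks.items() if not self.is_high_cost(c)]
        candidates = low_cost or list(blocks)
        return min(candidates, key=lambda b: blocks[b][1])


# Miss A is served alone for two cycles; B and C overlap each other,
# and their second cycle is also hidden behind hit activity.
costs = pure_miss_cost(
    [{"A"}, {"A"}, {"B", "C"}, {"B", "C"}],
    [False, False, False, True],
)

picker = ThresholdedVictimPicker(threshold=1.0)
victim = picker.pick_victim({"A": (2.0, 1), "B": (0.5, 5), "C": (0.5, 3)})
```

In this toy run the isolated miss A accumulates a higher cost than the overlapped misses B and C, so A is protected even though it is the least recently used block, and the victim is chosen from the low-cost blocks instead. A locality-only policy such as LRU would have evicted A.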

Results

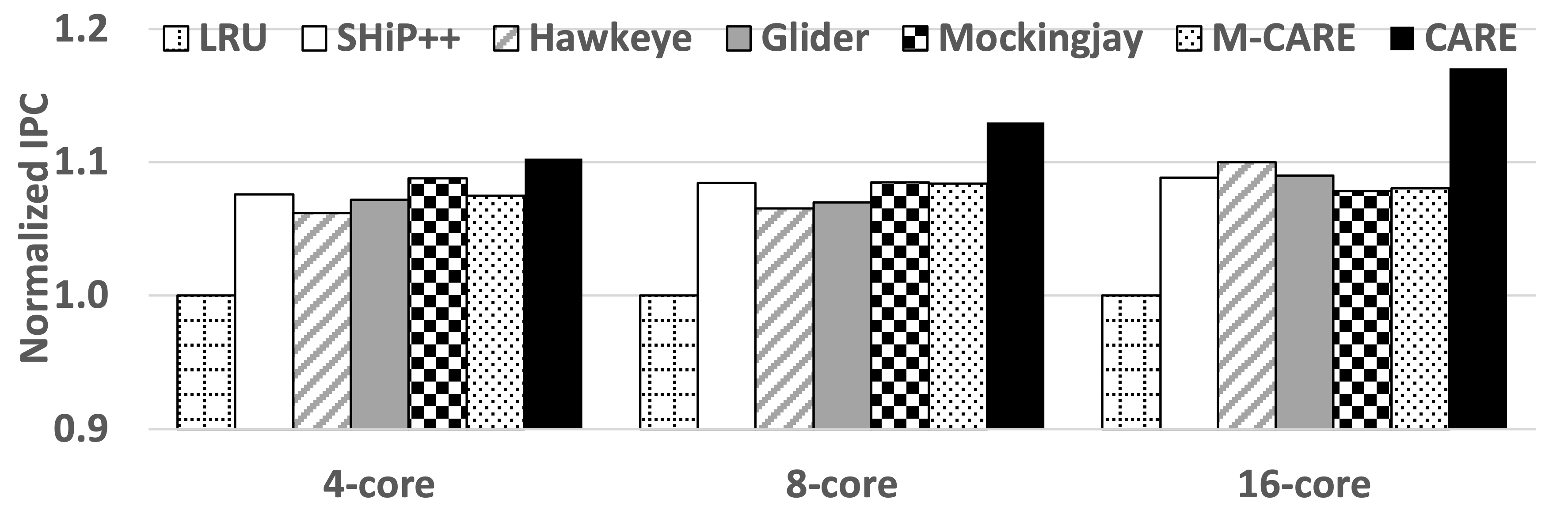

Performance comparison among LRU, SHiP++, Hawkeye, Glider, Mockingjay, M-CARE, and CARE

CARE achieves substantial improvements over traditional LRU policies, with an average 10.3% IPC gain in 4-core systems and up to 17.1% IPC improvement in 16-core systems. These results demonstrate CARE’s scalability and effectiveness in high-concurrency environments.

Conclusion

By integrating concurrency-aware metrics and adaptive mechanisms, CARE provides a robust approach to improving cache efficiency in multi-core systems, addressing both locality and concurrency to mitigate the Memory Wall problem.